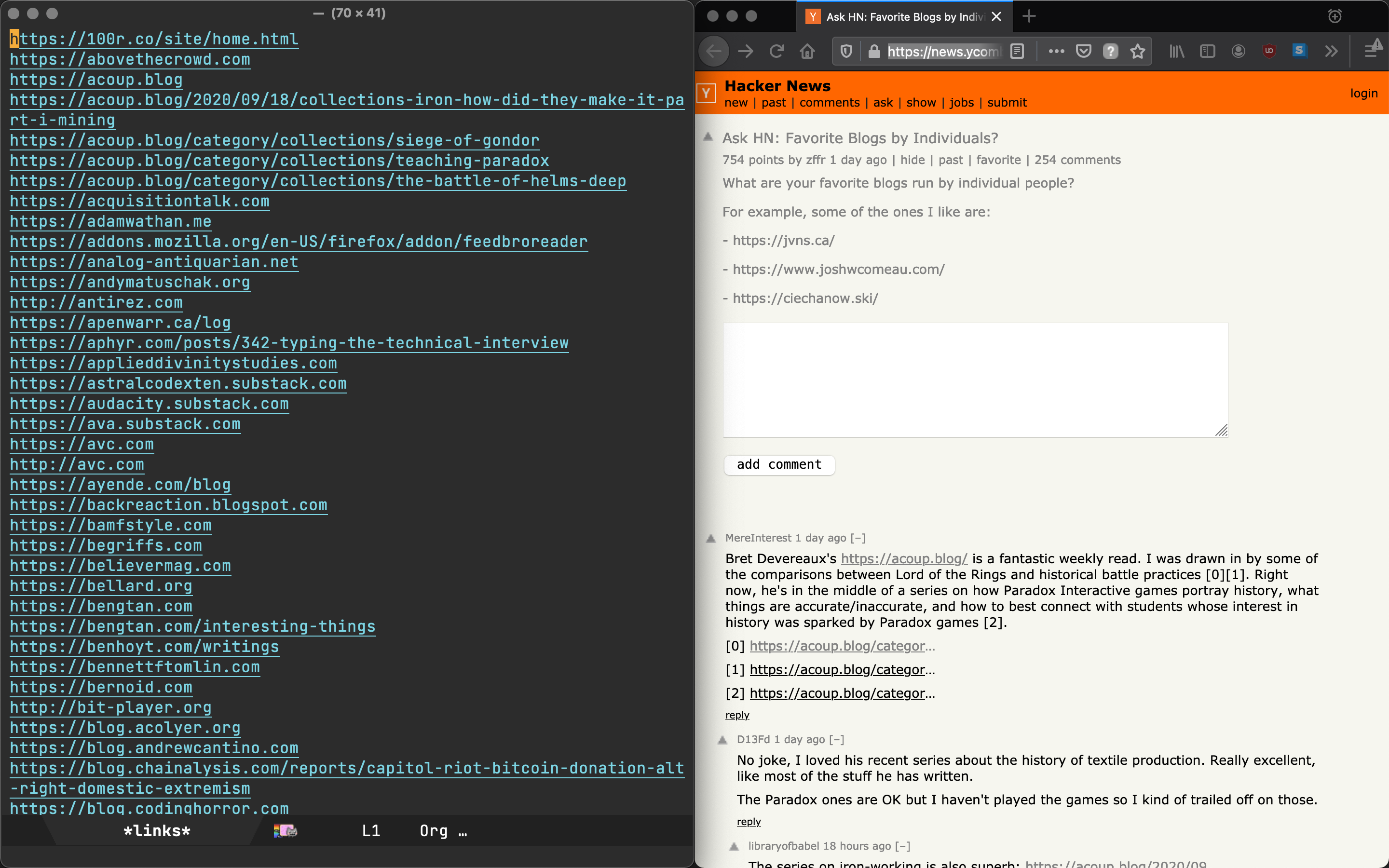

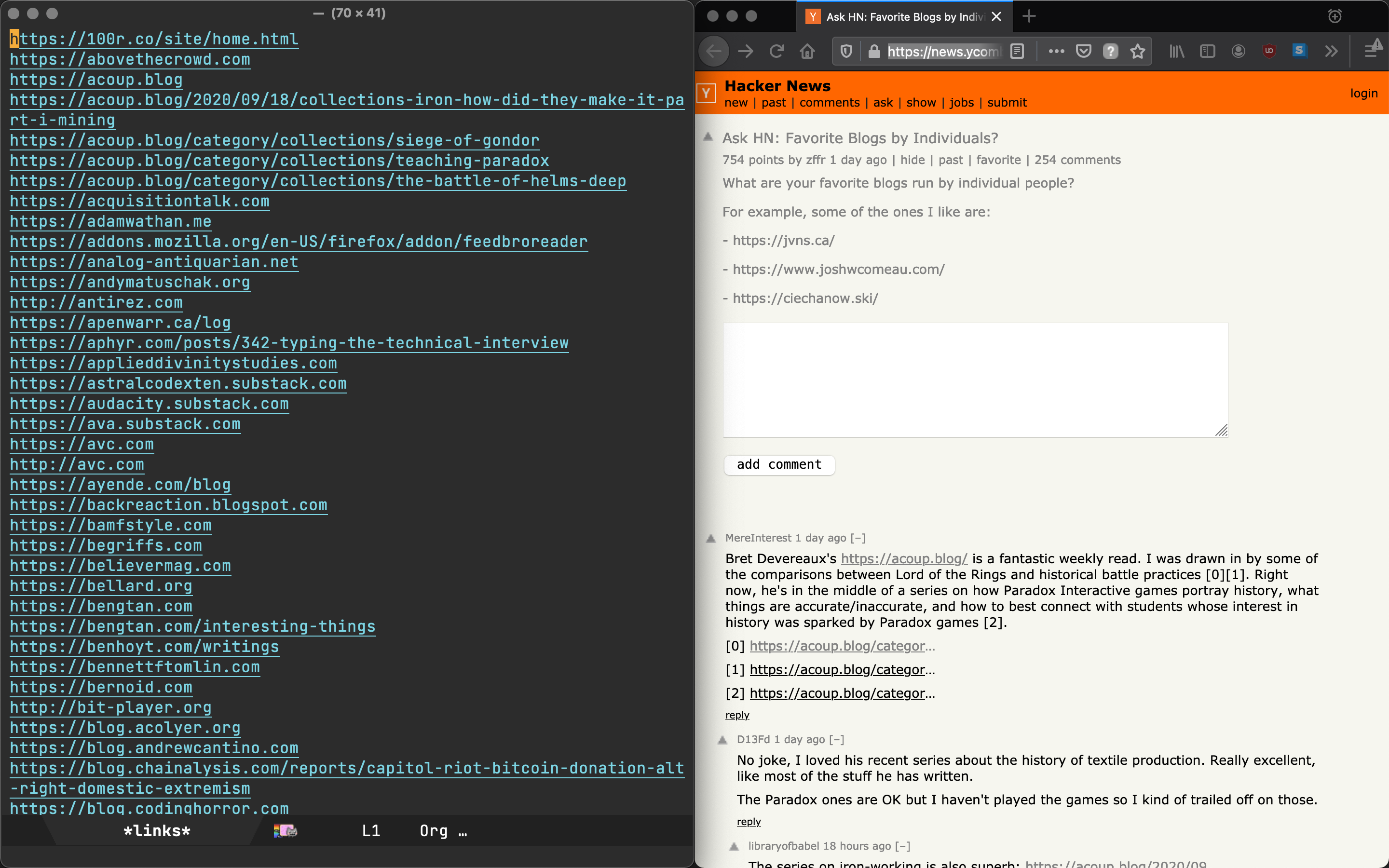

A recent Hacker News post, Ask HN: Favorite Blogs by Individuals, led me to dust off my oldie but trusty command to extract comment links. I use it to dissect these wonderful references more effectively.

You see, I wrote this command back in 2015, so we can likely revisit and improve it. The enlive package continues to do a fine job fetching, parsing, and querying HTML. Let's improve my code instead… we can shed a few redundant bits and maybe use newer libraries and features.

Most importantly, let's improve the user experience by sanitizing and filtering URLs a little better.

We start by writing a function that looks for a URL in the clipboard and subsequently fetches, parses, and extracts all links found in the target page.

    (require 'enlive)
    (require 'seq)
    (require 'subr-x) ; string-remove-suffix and thread-last

    (defun ar/scrape-links-from-clipboard-url ()
      "Scrape links from clipboard URL and return as a list.
    Fails if no URL in clipboard."
      (unless (string-prefix-p "http" (current-kill 0))
        (user-error "no URL in clipboard"))
      (thread-last (enlive-query-all (enlive-fetch (current-kill 0)) [a])
        ;; Drop trailing slashes so near-identical links compare equal.
        (mapcar (lambda (element)
                  (string-remove-suffix "/" (enlive-attr element 'href))))
        ;; Keep http(s) links only.
        (seq-filter (lambda (link)
                      (string-prefix-p "http" link)))
        (seq-uniq)
        ;; Ignore the scheme when sorting, so http and https interleave.
        (seq-sort (lambda (l1 l2)
                    (string-lessp (replace-regexp-in-string "^https?://" "" l1)
                                  (replace-regexp-in-string "^https?://" "" l2))))))

Let's chat about (current-kill 0) for a sec. There's no improvement here over my previous usage, but let's just say building interactive commands that work with your current clipboard (or kill ring in Emacs terminology) is super handy (see clone git repo from clipboard).
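As a rough sketch of that clipboard pattern, the check can live in a small helper of its own. The name my/clipboard-url is hypothetical (it isn't part of the original command), and the scheme test is a touch stricter than the plain "http" prefix check used above.

```elisp
(defun my/clipboard-url ()
  "Return the URL at the head of the kill ring, or signal a `user-error'.
Hypothetical helper, for illustration only."
  ;; The second argument (t) asks `current-kill' not to rotate the kill ring.
  (let ((text (current-kill 0 t)))
    (unless (string-match-p "\\`https?://" text)
      (user-error "No URL in clipboard"))
    text))
```

Any interactive command can then start from (my/clipboard-url) and bail out early with a friendly message when the clipboard holds something else.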

Moving on to sanitizing and filtering URLs… Links often have trailing slashes. Let's flush them. string-remove-suffix to the rescue. This and other handy string-manipulating functions are built into Emacs since 24.4 as part of subr-x.el.
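Here's a tiny illustration of the trailing-slash flush. The first URL with its trailing slash is a made-up input for the sake of the example.

```elisp
(require 'subr-x) ; harmless if the function is already loaded

;; Only a trailing "/" is removed; other strings pass through unchanged.
(string-remove-suffix "/" "https://apenwarr.ca/log/") ; "https://apenwarr.ca/log"
(string-remove-suffix "/" "http://antirez.com")       ; "http://antirez.com"
```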

Next, we can keep http(s) links and ditch everything else. The end-goal is to extract links posted by users, so these are typically fully qualified external URLs. seq-filter steps up to the task, included in Emacs since 25.1 as part of the seq.el family. We remove duplicate links using seq-uniq and sort them via seq-sort. All part of the same package.
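To see the three seq.el functions in isolation, here's a minimal sketch run against a made-up list. The names my/sample-links and my/clean-links are hypothetical, and the sample values merely stand in for href attributes scraped from a page.

```elisp
(require 'seq)

;; Made-up sample input: relative links, a mailto, and a duplicate.
(defvar my/sample-links
  '("https://apenwarr.ca/log" "item?id=1" "http://antirez.com"
    "https://apenwarr.ca/log" "mailto:someone@example.com"))

(defun my/clean-links (links)
  "Keep http(s) LINKS, drop duplicates, and sort them alphabetically."
  (seq-sort #'string-lessp
            (seq-uniq
             (seq-filter (lambda (link) (string-prefix-p "http" link))
                         links))))

(my/clean-links my/sample-links)
;; ("http://antirez.com" "https://apenwarr.ca/log")
```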

When sorting, we could straight up use seq-sort and string-lessp and nothing else, but it would separate http and https links. Let's not do that, so we drop http(s) prior to comparing strings in seq-sort's predicate. replace-regexp-in-string does the job here, but if you'd like to skip regular expressions, string-remove-prefix works just as well.

Yay, sorting no longer cares about http vs https:

    https://andymatuschak.org
    http://antirez.com
    https://apenwarr.ca/log
    ...
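If you'd rather go the regex-free route mentioned above, a sketch along these lines should do. Both my/strip-scheme and my/link-lessp are hypothetical names, not part of the original command.

```elisp
(require 'seq)
(require 'subr-x)

(defun my/strip-scheme (url)
  "Return URL without a leading \"http://\" or \"https://\"."
  (string-remove-prefix "http://" (string-remove-prefix "https://" url)))

(defun my/link-lessp (l1 l2)
  "Return non-nil if L1 sorts before L2, ignoring the URL scheme."
  (string-lessp (my/strip-scheme l1) (my/strip-scheme l2)))

(seq-sort #'my/link-lessp
          '("https://apenwarr.ca/log" "http://antirez.com" "https://andymatuschak.org"))
;; ("https://andymatuschak.org" "http://antirez.com" "https://apenwarr.ca/log")
```

Passing my/link-lessp as seq-sort's predicate gives the same scheme-agnostic ordering as the regular expression version.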

With all that in mind, let's flatten list processing using thread-last. This isn't strictly necessary, but since this is the 2021 edition, we'll throw in this macro added to Emacs in 2016 as part of 25.1. Arthur Malabarba has a great post on thread-last.
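For anyone new to the macro, here's a small side-by-side sketch using a made-up list of strings. Both forms compute the same thing; thread-last just lets you read the steps top to bottom.

```elisp
(require 'seq)
(require 'subr-x) ; thread-last

;; Nested calls read inside-out...
(seq-uniq (mapcar #'downcase '("A" "b" "B")))

;; ...while thread-last passes each result as the last argument of the next form.
(thread-last '("A" "b" "B")
  (mapcar #'downcase)
  (seq-uniq))
;; Both forms return ("a" "b")
```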

Now that we've built out the ar/scrape-links-from-clipboard-url function, let's make its content consumable!

This is the 2021 edition, so power up your completion framework du jour and feed the output of ar/scrape-links-from-clipboard-url to our completion robots…

I'm heavily invested in ivy, but since we're using the built-in completing-read function, any completion framework like vertico, selectrum, helm, or ido should kick right in to give you extra powers.

    (defun ar/view-completing-links-at-clipboard-url ()
      "Scrape links from clipboard URL and open the selected one in the browser."
      (interactive)
      (browse-url (completing-read "links: "
                                   (ar/scrape-links-from-clipboard-url))))

Sometimes you just want to open every link posted in the comments and use your browser to discard, closing tabs as needed. The recent HN instance wasn't one of these cases, with a whopping 398 links returned by our ar/scrape-links-from-clipboard-url.

Note: I capped the results to 5 in this gif/demo to prevent a Firefox tragedy (see seq-take).
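The capping code from the demo isn't shown, so here's just one possible way to do it with seq-take. The helper name my/take-links and its default of 5 are assumptions for illustration.

```elisp
(require 'seq)

(defun my/take-links (links &optional limit)
  "Return at most LIMIT of LINKS (5 when LIMIT is nil).
Hypothetical helper, for illustration only."
  (seq-take links (or limit 5)))

(my/take-links '("a" "b" "c" "d" "e" "f" "g")) ; ("a" "b" "c" "d" "e")
(my/take-links '("a" "b") 5)                   ; ("a" "b")
```

Wrapping the scraped list in something like this before handing it to the browser keeps the tab count predictable.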

In a case like Hacker News's, we don't want to surprise-attack the user and bomb their browser by opening a gazillion tabs, so let's give a little heads-up using y-or-n-p.

    (defun ar/browse-links-at-clipboard-url ()
      "Scrape links from clipboard URL and open all in external browser."
      (interactive)
      (let ((links (ar/scrape-links-from-clipboard-url)))
        ;; Ask first: the page may contain hundreds of links.
        (when (y-or-n-p (format "Open all %d links? " (length links)))
          (mapc #'browse-url links))))

My 2015 solution leveraged an org mode buffer to dump the fetched links. The org way is still my favorite. You can use whatever existing Emacs super powers you already have on top of the org buffer, including searching and filtering fueled by your favorite completion framework. I'm a fan of Oleh's swiper.

The 2021 implementation is mostly a tidy-up, removing some cruft, but also uses our new ar/scrape-links-from-clipboard-url function to filter and sort accordingly.

    (require 'org)

    (defun ar/view-links-at-clipboard-url ()
      "Scrape links from clipboard URL and dump to an org buffer."
      (interactive)
      (with-current-buffer (get-buffer-create "*links*")
        (org-mode)
        (erase-buffer)
        (mapc (lambda (link)
                ;; `org-link-make-string' replaces the obsolete
                ;; `org-make-link-string' (Org 9.3 and later).
                (insert (org-link-make-string link) "\n"))
              (ar/scrape-links-from-clipboard-url))
        (goto-char (point-min))
        (switch-to-buffer (current-buffer))))

To power our 2021 link scraper, we've used newer libraries included in more recent versions of Emacs, leveraged an older but solid HTML-parsing package, pulled in org mode (the epicenter of Emacs note-taking), dragged in our favorite completion framework, and tickled our handy browser, all by smothering the lot with some elisp glue to make Emacs do exactly what we want. Emacs does rock.